Electricity is emerging as the primary constraint on AI data center growth. By 2026, as the development pipeline materializes on the grid, the limiting factors shift from semiconductor supply and construction to firm power availability, interconnection capacity, and the infrastructure linking megawatts to server racks.

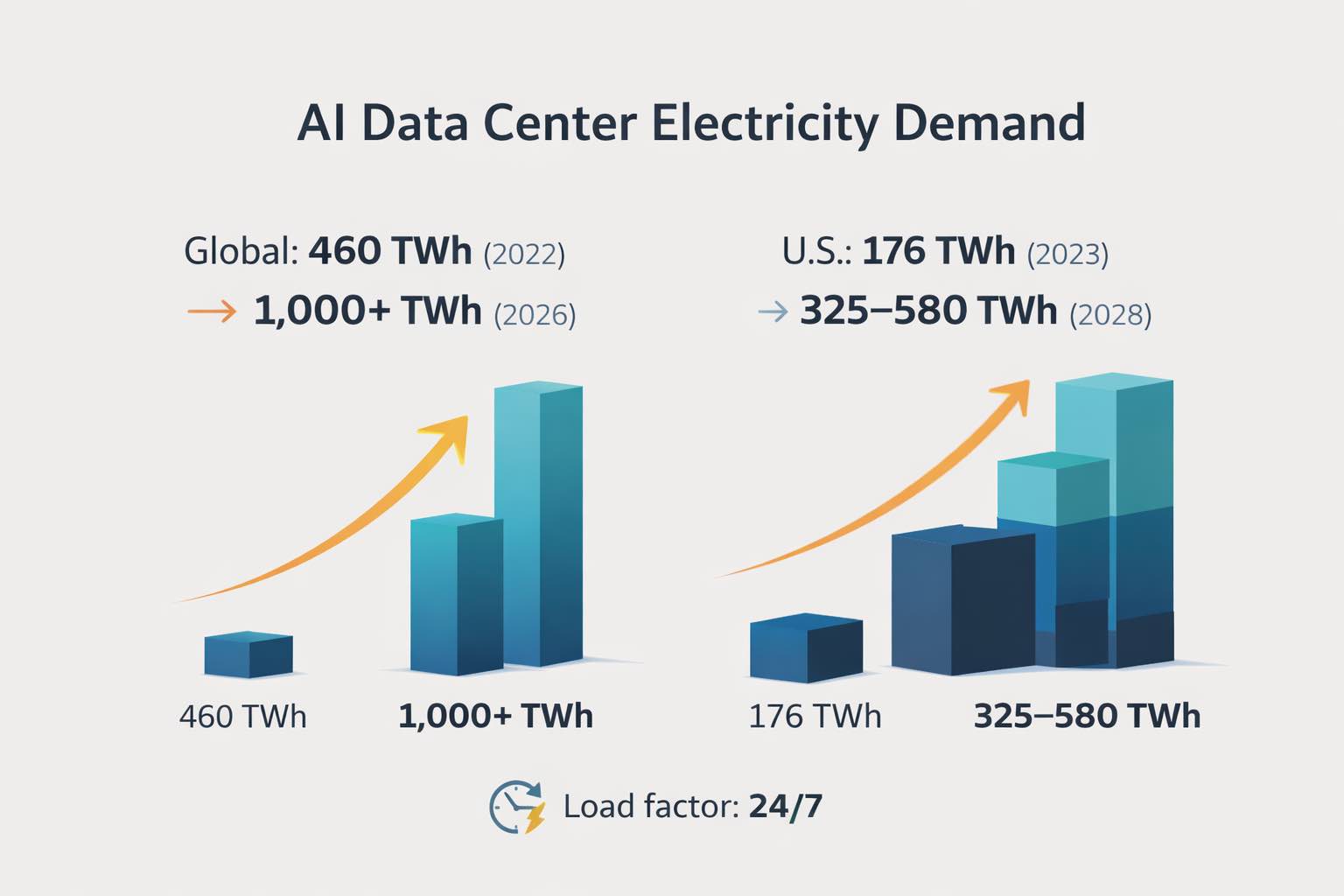

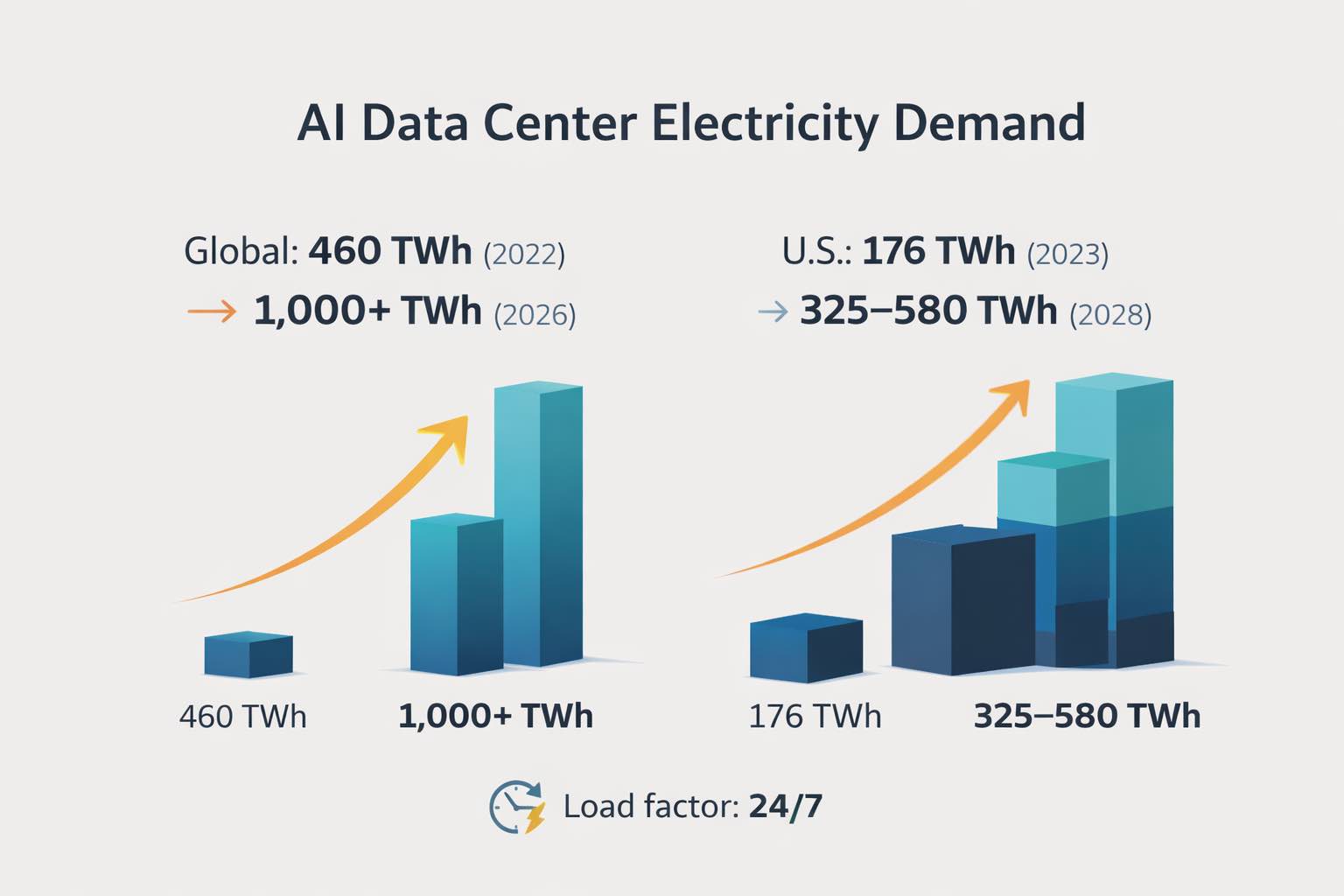

Global data center electricity use is projected to rise from about 460 TWh in 2022 to more than 1,000 TWh by 2026. In the United States, data centers consumed about 176 TWh in 2023, roughly 4.4% of total electricity use, with projections of 325–580 TWh by 2028, or about 6.7%–12% of total electricity use, depending on buildout and efficiency.
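As a quick sanity check on these figures, the short Python sketch below derives the growth rate and the total consumption they imply. It uses only the numbers quoted above; the helper names are illustrative, and because the 2026 figure is "more than 1,000 TWh", the growth rate is best read as a lower bound.

```python
# Figures quoted in the text (TWh and fractional shares).
GLOBAL_2022_TWH = 460
GLOBAL_2026_TWH = 1000          # "more than 1,000 TWh", so treated as a floor
US_DC_2023_TWH = 176
US_DC_2023_SHARE = 0.044
US_DC_2028_TWH = (325, 580)     # low and high projections
US_DC_2028_SHARE = (0.067, 0.12)


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


def implied_total(part_twh: float, share: float) -> float:
    """Total consumption implied by a part and its share of the total."""
    return part_twh / share


global_growth = cagr(GLOBAL_2022_TWH, GLOBAL_2026_TWH, 2026 - 2022)
us_total_2023 = implied_total(US_DC_2023_TWH, US_DC_2023_SHARE)
us_total_2028 = [
    implied_total(twh, share)
    for twh, share in zip(US_DC_2028_TWH, US_DC_2028_SHARE)
]

print(f"Implied global growth rate: at least {global_growth:.1%} per year")
print(f"Implied U.S. total, 2023: {us_total_2023:,.0f} TWh")
print(f"Implied U.S. total, 2028: {us_total_2028[0]:,.0f} and {us_total_2028[1]:,.0f} TWh")
```

Both ends of the 2028 range imply a similar U.S. total, which suggests the share range and the TWh range were derived from a single underlying forecast of total consumption.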

AI Data Center Electricity Demand: 2026 Key Takeaways

The year 2026 will present a deliverability shock rather than a nationwide energy shortage. The primary constraints are local, involving substations, transformers, transmission corridors, and interconnection queues concentrated in select high-growth regions.

The core challenge is the 'always-on' load profile. Data centers maintain high load factors, which place sustained pressure on fuel supply, capacity margins, and reliability reserves for significantly more hours annually compared to traditional industrial demand.

PJM represents the most pronounced pressure point in the United States. Planning analyses support up to approximately 30 GW of data center load growth between 2025 and 2030, a scale sufficient to transform capacity planning, procurement strategies, and infrastructure investment.

Texas serves as a case study in volatility. Large-load interconnection requests have exceeded 233 GW, with over 70% associated with data centers, prompting the implementation of stricter rules regarding interconnection, curtailment, and reliability obligations.

Ireland demonstrates the rapid pace at which digital demand can dominate an electricity grid. In 2024, data centers consumed 6,969 GWh, accounting for 22% of metered electricity consumption. This underscores how swiftly a concentrated compute cluster can escalate into a system-level challenge.

The physical requirements of facilities are evolving. AI data centers built around 2026 are trending toward rack densities of 100 kW or higher and increasingly require liquid cooling. These changes elevate power quality requirements and intensify stress on local distribution infrastructure.

Firm power availability is emerging as a key locational advantage. Factors such as inexpensive land or tax incentives are becoming less significant compared to secure interconnection, upgrade capacity, and a reliable pathway to firm energy within constrained timelines.

Why 2026 Hits Differently: Load Factor Meets The Build-Time Wall

Power systems are capable of managing brief and predictable demand peaks. However, they face significant challenges when confronted with large, near-continuous loads. This persistent demand, characteristic of AI data centers, causes electricity consumption to increase more rapidly than peak demand. As a result, planners must secure additional capacity, fuel deliverability, transmission capability, and operating reserves for extended periods.
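The effect of load factor can be illustrated with simple arithmetic. The sketch below compares two loads with the same 1 GW peak; the load factors of 0.5 and 0.9 are illustrative assumptions, not figures from any forecast cited here.

```python
HOURS_PER_YEAR = 8760


def annual_energy_twh(peak_gw: float, load_factor: float) -> float:
    """Annual energy for a load with the given peak and load factor."""
    return peak_gw * load_factor * HOURS_PER_YEAR / 1000


# Hypothetical load factors chosen only to show the contrast.
peaky_load_twh = annual_energy_twh(peak_gw=1.0, load_factor=0.5)
steady_load_twh = annual_energy_twh(peak_gw=1.0, load_factor=0.9)

print(f"Peaky 1 GW load:  {peaky_load_twh:.2f} TWh per year")
print(f"Steady 1 GW load: {steady_load_twh:.2f} TWh per year")
print(f"Extra energy at the same peak: {steady_load_twh / peaky_load_twh - 1:.0%}")
```

Under these assumptions, the steadier load draws far more energy for the same contribution to peak, which is why energy consumption can outpace peak demand.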

Recent utility forecasts indicate a five-year nationwide summer peak growth of 166 GW, with approximately 90 GW attributable to data centers. This concentration suggests that the forthcoming demand cycle will not be broadly distributed among households or small businesses. Instead, it will be concentrated within a sector that expands by campus-scale increments, resulting in discrete surges that place significant strain on local networks.

Project timing further accelerates these challenges. Several large-scale projects are scheduled for 2026 and 2027, with tracked campuses collectively representing nearly 30 GW of demand. When such a substantial load is concentrated within a limited geographic area, the grid cannot evenly distribute the impact. Instead, local repricing occurs through congestion, upgrade obligations, and an increasing premium on deliverable megawatts.

Where The Power Constraint Bites First

PJM: the epicenter of U.S. data center load growth

PJM stands out for combining dense population, existing transmission corridors, and a rapidly expanding data center footprint. Planning work supports up to ~30 GW of data center growth from 2025 to 2030. PJM is also estimated to account for roughly 40% of data centers in 2025, making the region a leading indicator of how capacity procurement, queue reform, and cost allocation evolve under stress.

The economic implications are direct. In a capacity-constrained market, each additional dependable megawatt becomes a scarce asset. This scarcity influences contract pricing, alters the comparative economics of peaking versus baseload generation, and accelerates investment in substations and transmission upgrades.

Texas: interconnection queues become governance

Texas exemplifies the interconnection queue challenge. Large-load interconnection requests have surged to over 233 GW, with more than 70% related to data centers. While not all requests result in completed projects, the existence of such a queue presents a governance dilemma. Planners must balance the risks of overbuilding for projects that may not materialize against underbuilding, which could jeopardize reliability and lead to price volatility if projects proceed.
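The planning dilemma becomes clearer when the queue is scaled by a realization rate. The sketch below uses the 233 GW and 70% figures from the text as lower bounds; the realization rates are hypothetical scenarios chosen for illustration, not estimates from ERCOT or any other source.

```python
QUEUE_GW = 233                 # from the text ("over 233 GW")
DATA_CENTER_SHARE = 0.70       # from the text ("more than 70%")

# Hypothetical shares of queued data center load that actually get built.
REALIZATION_RATES = (0.05, 0.10, 0.25)

dc_queue_gw = QUEUE_GW * DATA_CENTER_SHARE
scenarios = {rate: dc_queue_gw * rate for rate in REALIZATION_RATES}

print(f"Data center requests in queue: at least {dc_queue_gw:.1f} GW")
for rate, built_gw in scenarios.items():
    print(f"  {rate:.0%} realized -> {built_gw:.1f} GW of new load")
```

Even the low scenario represents several gigawatts of new load, while the spread between scenarios shows how much planning risk sits in the realization assumption alone.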

Texas also illustrates an emerging reality for AI data centers: grid access now entails increasing obligations. Curtailment agreements, operational coordination, and demonstrable deliverability are transitioning from optional features to fundamental requirements as large-scale loads proliferate.

Ireland: a case study in grid saturation speed

Ireland provides a clear example of the effects when data centers become a macro-level load. In 2024, metered data center consumption increased to 6,969 GWh, representing 22% of total metered electricity use. When a single sector commands such a significant share, grid investment transitions from a cyclical to a continuous process, necessitating ongoing reinforcement of network assets, additional firm capacity, and stricter regulations regarding connection timing and demand management.
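To put the Irish figures in capacity terms, the sketch below converts the 2024 consumption into an implied system total and an average continuous load. It relies only on the two numbers quoted above; because the 22% share is rounded, the implied total is approximate.

```python
import calendar

DC_CONSUMPTION_GWH = 6969   # from the text, 2024
DC_SHARE = 0.22             # from the text, rounded
YEAR = 2024

# 2024 is a leap year, so it has 8,784 hours.
hours_in_year = (366 if calendar.isleap(YEAR) else 365) * 24

implied_total_gwh = DC_CONSUMPTION_GWH / DC_SHARE
average_dc_load_mw = DC_CONSUMPTION_GWH / hours_in_year * 1000

print(f"Implied total metered consumption: {implied_total_gwh:,.0f} GWh")
print(f"Average data center load: {average_dc_load_mw:,.0f} MW")
```

The average works out to a little under 0.8 GW of continuous demand, a modest figure by U.S. standards that still represents more than a fifth of a national system.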

The primary lesson is not that all markets will mirror Ireland's experience. Rather, concentrated compute demand can fundamentally alter a grid’s planning paradigm within a few years, particularly when new loads are clustered near constrained nodes.

Snapshot table: the 2026 constraint map

| Region | 2026-era stress signal | What it implies |
| --- | --- | --- |
| PJM | Up to ~30 GW data center growth (2025–2030) | Capacity procurement and transmission upgrades become gating factors for projects |
| ERCOT (Texas) | >233 GW large-load requests; over 70% from data centers | Interconnection queue reform, curtailment rules, and reliability pricing pressure |
| Ireland | 6,969 GWh in 2024; 22% of metered electricity consumption | Grid saturation drives tighter connection policy and faster firm capacity decisions |
| U.S. overall | 176 TWh (2023) to 325–580 TWh projected by 2028 | Data centers become a material driver of national load growth and system planning |

The Economics Of The 2026 Power Shock: Megawatts Become A Scarce Asset

A critical yet often overlooked aspect of the AI cycle is its direct monetization of electricity. For many operators, each delivered megawatt functions as a production line. Without access to firm power, GPU fleets may remain underutilized or fail to be deployed, making interconnection and deliverability significant competitive advantages.

This dynamic reshapes bargaining power across the stack:

Utilities and grid operators gain leverage through queue timing, upgrade requirements, and tariff design because they control the scarce permissioned MW.

Dispatchable generation and fuel logistics return to the strategic core because round-the-clock demand cannot be met reliably without firming, storage, or both.

Transmission, substations, and equipment become hard bottlenecks. When permitting and transformer lead times lag demand growth, scarcity manifests as congestion, higher upgrade costs, and delayed energization.

What Breaks The Shock: Four Practical Pathways Scaling In 2026

1) Make computing flexible, not just efficient

The grid does not need data centers to disappear at peak. It needs them to become predictable and controllable. The strategic play is “compute as a controllable load”:

- contracted curtailment blocks with clear performance rules
- workload shifting across regions and time windows
- on-site batteries to ride through short peak periods
- pricing structures that reward flexibility, the way capacity markets reward dependable generation

Flexibility reduces overall system costs by decreasing the volume of firm capacity required to address only a limited number of peak demand hours.
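A toy model can show the mechanism. The sketch below builds a synthetic hourly load curve and compares the system peak with and without a curtailable data center load. Every parameter here (the load shape, the 10 GW data center block, the 50% curtailment depth, the 100 contracted hours) is a hypothetical assumption for illustration, not a description of any real grid or tariff.

```python
import math

HOURS = 8760
DC_LOAD_GW = 10.0        # hypothetical flat data center load
CURTAIL_FRACTION = 0.5   # hypothetical share of load shed when called
CURTAIL_HOURS = 100      # hypothetical contracted curtailment hours per year


def base_load_gw(hour: int) -> float:
    """Synthetic non-data-center load: daily cycle plus a sharp summer peak."""
    daily = math.sin(2 * math.pi * ((hour % 24) - 10) / 24)
    seasonal = math.sin(math.pi * hour / HOURS) ** 8
    return 60.0 + 10.0 * daily + 30.0 * seasonal


base = [base_load_gw(h) for h in range(HOURS)]

# Curtailment is called in the hours with the highest base load.
ranked_hours = sorted(range(HOURS), key=lambda h: base[h], reverse=True)
called = set(ranked_hours[:CURTAIL_HOURS])

peak_inflexible = max(b + DC_LOAD_GW for b in base)
peak_flexible = max(
    b + DC_LOAD_GW * ((1 - CURTAIL_FRACTION) if h in called else 1.0)
    for h, b in enumerate(base)
)

peak_reduction_gw = peak_inflexible - peak_flexible
energy_curtailed_share = CURTAIL_HOURS * CURTAIL_FRACTION / HOURS

print(f"Peak without flexibility: {peak_inflexible:.2f} GW")
print(f"Peak with flexibility:    {peak_flexible:.2f} GW")
print(f"Firm capacity avoided:    {peak_reduction_gw:.2f} GW")
print(f"Data center energy curtailed: {energy_curtailed_share:.2%} of annual use")
```

In this sketch the data center gives up well under 1% of its annual energy, yet the system peak that planners must cover falls. The size of the reduction depends heavily on how sharp the peak is and how many hours are contracted, so the numbers should not be read as a forecast.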

2) Co-located generation and on-site power move from niche to mainstream

An increasing proportion of large projects are integrating data centers with on-site or adjacent generation. This approach is primarily intended to mitigate project timeline risks rather than to disconnect from the grid. On-site power enables staged energization, supports ramp periods, and provides grid services during ongoing infrastructure upgrades.

For AI operators, the principal value lies in increased optionality. Securing firm power supply from the outset safeguards utilization economics and diminishes reliance on uncertain interconnection queue schedules.

3) Cooling and power delivery become first-order constraints

AI-driven workloads are transforming facility design parameters. Rack densities approaching 100 kW are prompting the adoption of liquid cooling and more robust electrical distribution systems. Some liquid immersion cooling configurations operate at approximately 100 kW per rack, with certain deployments reaching up to 150 kW per rack. While higher density can reduce the physical footprint for a given compute target, it also concentrates electrical and thermal loads, thereby increasing power quality requirements and exposing vulnerabilities in local distribution systems.
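The footprint effect is easy to quantify. The sketch below counts the racks needed for a fixed IT load at different densities. The 100 kW and 150 kW values come from the text; the 100 MW campus size and the 10 kW legacy baseline are assumptions added for comparison.

```python
import math

IT_LOAD_MW = 100  # hypothetical campus IT load

# kW per rack; the legacy baseline is an assumed comparison point.
DENSITIES_KW = {
    "legacy air-cooled (assumed)": 10,
    "liquid-cooled (from text)": 100,
    "high-end immersion (from text)": 150,
}

racks_needed = {
    label: math.ceil(IT_LOAD_MW * 1000 / kw_per_rack)
    for label, kw_per_rack in DENSITIES_KW.items()
}

for label, racks in racks_needed.items():
    print(f"{label}: {racks:,} racks for {IT_LOAD_MW} MW")
```

The same electrical load lands on roughly a tenth of the racks, or fewer, compared with the assumed legacy baseline, which is why each row, busway, and cooling loop has to carry far more power and heat.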

4) Procurement shifts from “cheapest kWh” to “bankable firm power”

Large compute clusters are moving procurement priorities from nominal energy price toward deliverability and firmness. The winning portfolios tend to combine:

- long-duration contracts that prioritize reliable delivery at the node
- renewables paired with firming resources and storage
- localized capacity-style arrangements that fund new generation and wires where the load lands

The practical outcome is an increase in the total power cost in constrained regions, accompanied by a reduced risk of commissioning delays.

Macro And Market Implications That Matter In 2026

Utilities are selectively reemerging as growth assets. In jurisdictions where regulators permit cost recovery and efficient upgrade allocation, high-load-factor demand expands the rate base through investments in transmission, substations, and generation. Conversely, when cost allocation becomes politicized or unpredictable, project risk increases and capital deployment slows.

Gas and nuclear power are regaining strategic importance. Irrespective of long-term decarbonization objectives, near-term reliability requirements are driving systems toward additional firm capacity and life extensions to support continuous, 24/7 load profiles.

Grid hardware availability and permitting timelines constitute the primary bottlenecks. When demand increases faster than the delivery of transmission lines and transformers, scarcity premiums manifest as congestion, upgrade costs, and capacity pricing. The 2026 power shock marks the first cycle in which these premiums become evident across multiple regions simultaneously.

Policy friction intensifies as data centers become significant contributors to utility bills. Once data centers materially affect local electricity rates, policy debates focus on the allocation of upgrade costs, reliability obligations for large loads, and the pricing of land, water, and power for hyperscale developments.

Frequently Asked Questions (FAQ)

1. What is the “2026 power demand shock” for AI data centers?

It is the point where AI data center pipelines translate into large, persistent electricity demand that outpaces local interconnection and upgrade timelines. The stress shows up in substations, transformers, and delivery, not in a national energy shortage.

2. How big is global data center electricity demand by 2026?

Global data center electricity use is projected to increase from about 460 TWh in 2022 to more than 1,000 TWh by 2026, a jump large enough to force grid planners to treat data centers as a macro demand category.

3. How large is U.S. data center electricity use, and where is it heading?

U.S. data centers consumed about 176 TWh in 2023, accounting for about 4.4% of total electricity use. Projections imply 325–580 TWh by 2028, which can raise the share to roughly 6.7%–12% depending on efficiency and buildout pace.

4. Why is PJM so central to the data center power story?

Planning work in PJM supports up to ~30 GW of data center load growth between 2025 and 2030, and the region is estimated to account for roughly 40% of data centers in 2025. That concentration makes the region a leading indicator for capacity and queue dynamics.

5. Why is Texas such an important case study?

Texas has more than 233 GW of large-load requests, with over 70% tied to data centers. That scale forces rule changes around interconnection, curtailment, and reliability obligations, and it exposes how queues shape market outcomes.

6. Why are AI data centers adopting liquid cooling and on-site generation?

Rack densities near 100 kW increasingly require liquid cooling and stronger internal power distribution. On-site or co-located generation reduces the risk that multi-year grid upgrades delay energization and supports reliable commissioning during ramp periods.

7. Will the power constraint slow AI buildouts or mostly raise costs?

It will do both. In constrained regions, power availability can delay commissioning, force relocation, or require costly upgrades. Where projects do proceed, the all-in cost rises due to interconnection expenses, firming needs, and reliability obligations.

Conclusion

The 2026 power demand shock is a collision of mismatched timelines. AI campuses are constructed within 18 to 24 months, whereas grid upgrades, permitting, and equipment procurement require several years. In the near term, successful operators will be those who treat electricity as strategic infrastructure, prioritizing secure interconnection, workload flexibility, firm power arrangements, and thermal designs optimized for high-density racks.

In the AI era, deliverable megawatts, rather than compute capacity, constitute the most constrained asset.

Disclaimer: This material is for general information purposes only and is not intended as (and should not be considered to be) financial, investment, or other advice on which reliance should be placed. No opinion given in the material constitutes a recommendation by EBC or the author that any particular investment, security, transaction, or investment strategy is suitable for any specific person.