Nvidia is moving away from a deployment-linked infrastructure framework with OpenAI and toward a simpler direct equity investment of approximately $30 billion. The shift changes what the market is evaluating. The question is less how much capacity Nvidia can finance and more how much of OpenAI’s platform economics Nvidia can capture. Nvidia’s central role in advanced computing is unchanged.

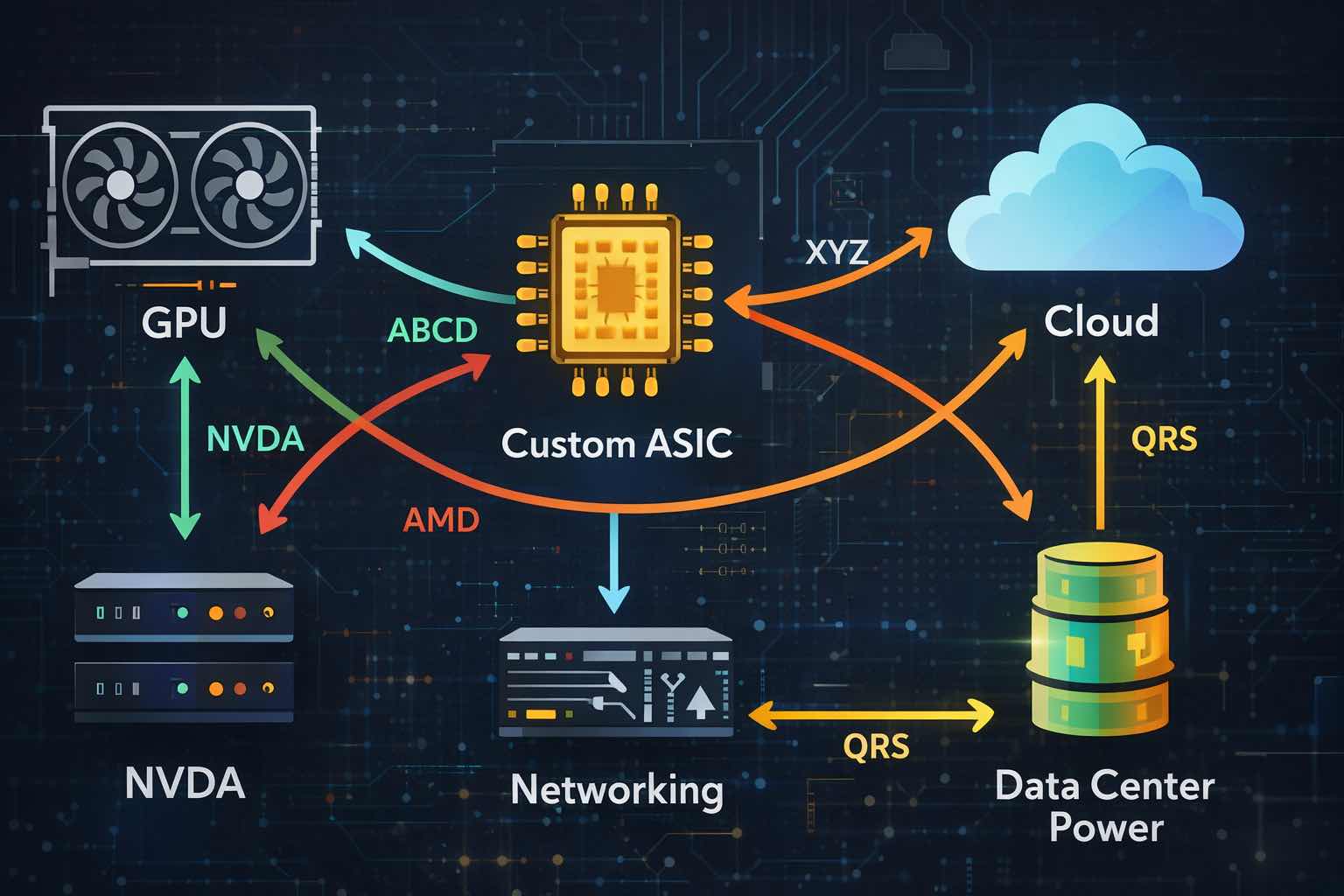

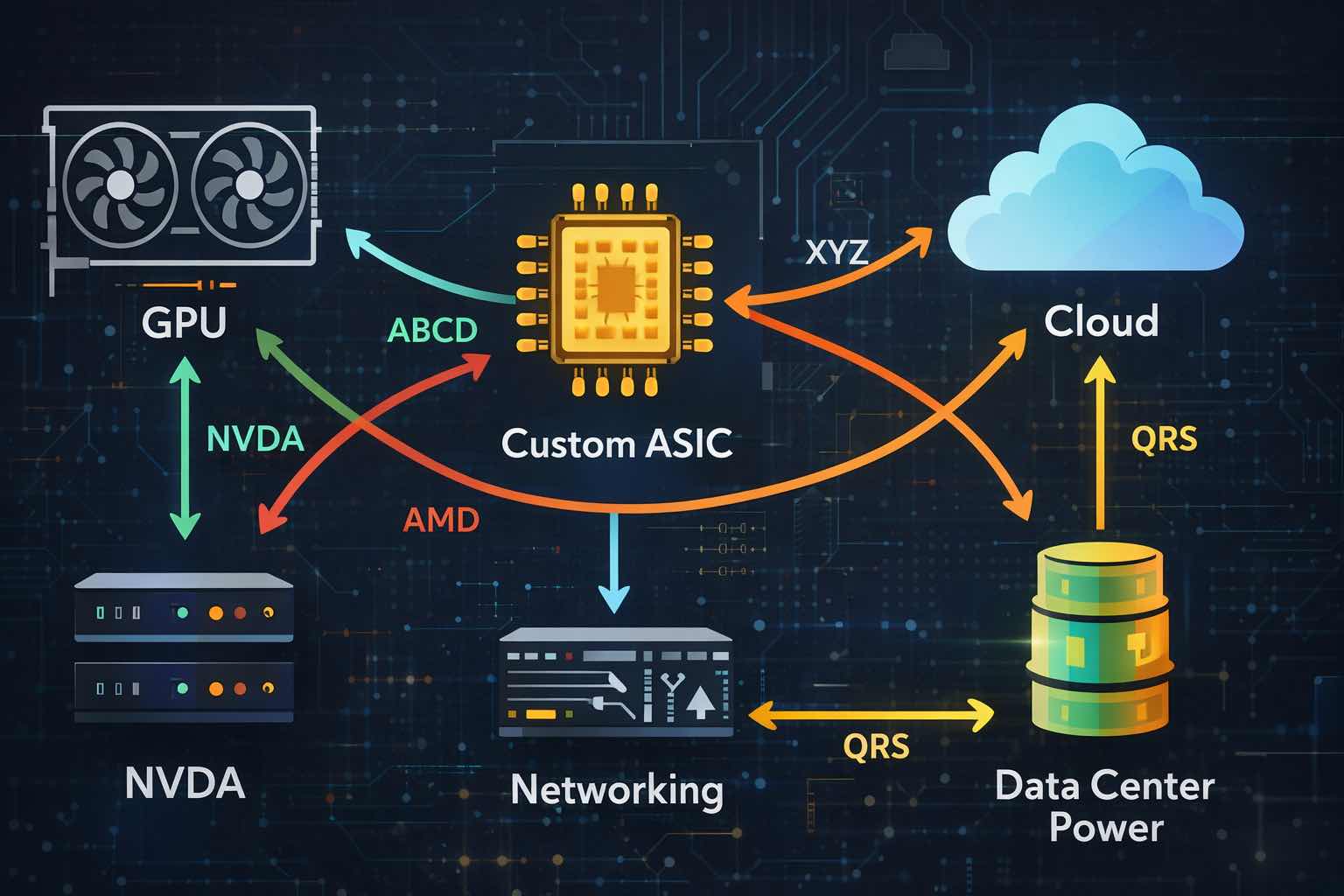

The transition comes as investors reassess the capital expenditure cycle for artificial intelligence. OpenAI is building a multi-supplier technology stack that spans GPUs, custom accelerators, and networking, and it is spreading workloads across several cloud providers. That widens the set of potential beneficiaries among technology stocks, but it also calls for closer analysis of project timelines, profit margins, and capital requirements.

NVIDIA OpenAI Deal Restructuring Data Table

| Item | Amount / Scale | Timing | Why It Matters For Stocks |
|---|---|---|---|
| NVIDIA’s New OpenAI Stake | ~$30B equity investment | Reported as near-term, inside a broader round expected to exceed $100B | Cleaner structure, less balance-sheet linkage to data center construction; turns “OpenAI exposure” into valuation upside and downside |
| The Earlier Framework | Up to $100B, staged by deployment | Announced in September 2025 as a letter of intent | Would have blended financing and procurement, increasing circular-funding optics and execution uncertainty |
| NVIDIA Systems Planned For OpenAI | At least 10 GW (millions of GPUs) | First 1 GW targeted for 2H 2026 on Vera Rubin | Confirms scale, but realized revenue depends on deployment pacing |
| AMD OpenAI Partnership | 6 GW of AMD Instinct GPUs | First 1 GW targeted for 2H 2026 | Introduces a credible second supplier and pressures pricing over time |
| Broadcom Custom Accelerator Program | 10 GW of OpenAI-designed accelerators plus Broadcom Ethernet | Deployments start 2H 2026; complete by end of 2029 | Expands the custom silicon lane and elevates AI networking spend |
| OpenAI Oracle Cloud Commitment | $300B of compute over five years | Announced 2025 | A rare “needle-moving” contract for cloud capacity providers and the data center supply chain |
| OpenAI Infrastructure Ambition | About $600B through 2030 | Multi-year | Keeps the sector’s capex floor high, while raising the odds of “digest” phases |

These figures reflect announced partnerships and current reporting on the deal restructuring.

For context, NVDA traded near $188, AMD near $203, AVGO near $334, ORCL near $157, and MSFT near $398 as of February 20, 2026.

Why Nvidia Chose Equity Over Infrastructure Tranches

An investment schedule tied to gigawatt increments links capital deployment to construction, permitting, power delivery, and procurement timelines. A direct equity stake, by contrast, caps the total commitment, reduces exposure to project delays, and preserves flexibility if model economics shift toward more efficient training or lower-cost inference hardware.

The equity route also improves optics, because it weakens the perception that Nvidia’s chip revenue is being recycled through closely connected financing arrangements.

What The Pivot Signals About OpenAI’s Procurement Strategy

OpenAI’s agreements make suppliers compete on both cost and how quickly they can deliver capacity. Nvidia remains a preferred partner for compute and networking within the 10 GW plan, with initial deployments scheduled for the second half of 2026. At the same time, OpenAI has committed to 6 GW with AMD and is developing a 10 GW custom accelerator program with Broadcom.

The main near-term effect is not that any supplier gets displaced, but sharper pricing and a faster shift of suitable workloads to alternative hardware.

What It Means For Tech Stocks

Nvidia (NVDA): Equity exposure increases potential gains from OpenAI’s monetization, but also introduces private-valuation risk and underscores that major purchasers are negotiating more aggressively.

AMD (AMD): The partnership with OpenAI provides strategic validation, though the related revenue is expected to be recognized mainly from late 2026 onward.

Broadcom (AVGO): The combination of custom accelerators and Ethernet solutions positions AI networking as a sustainable growth driver, rather than merely a byproduct of GPU demand cycles.

Microsoft (MSFT) and Oracle (ORCL): OpenAI’s multi-cloud approach expands the total addressable market for AI hosting expenditures but diminishes the likelihood that any single provider will capture the majority of demand by default.

TSMC (TSM): More custom silicon and more GPU generations reinforce demand for leading-edge manufacturing and advanced packaging.

How To Frame The Trade In 2026

The market currently rewards evidence of completed deployments over announcements. Four indicators are worth monitoring: finalized terms for the $30 billion equity stake, capital expenditure guidance from hyperscalers, lead times and pricing for next-generation accelerators, and how far custom silicon moves from pilot projects into large-scale production inference.

How To Trade Nvidia With EBC Financial Group

Nvidia is a clear and liquid proxy for the AI infrastructure cycle, but it is also highly volatile, particularly around earnings reports, product launches, and broader market downturns. EBC Financial Group offers access to Nvidia’s price movements through tools designed for active risk management, enabling traders to express directional views while limiting potential losses.

Investors should begin by identifying the catalyst and the investment time horizon, then size positions conservatively and set risk limits before entry. Nvidia’s trends may reward patience, but its volatility can punish excessive leverage. Defining a clear exit threshold is as important as setting a price target.
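One common way to turn "size conservatively" and "define an exit threshold" into a number is fixed-fractional sizing. You choose the fraction of the account you are willing to lose if the exit is hit, then divide that amount by the per-share distance between entry and exit. The Python sketch below is illustrative only and is not presented as the author’s or EBC’s method. The function name `position_size`, the $50,000 account, the 1% risk fraction, and the $173 stop are all hypothetical. The $188 entry reuses the NVDA reference price quoted earlier.

```python
def position_size(account_value: float, risk_fraction: float,
                  entry_price: float, stop_price: float) -> int:
    """Hypothetical helper: shares for a long position such that a fill at
    stop_price loses at most account_value * risk_fraction.

    Ignores fees, slippage, gaps through the stop, and leverage/margin rules.
    """
    if not 0 < risk_fraction < 1:
        raise ValueError("risk_fraction must be between 0 and 1")
    risk_per_share = entry_price - stop_price  # long: the stop sits below entry
    if risk_per_share <= 0:
        raise ValueError("stop_price must be below entry_price for a long")
    max_loss = account_value * risk_fraction   # the risk budget in currency
    # Round down so the position never exceeds the risk budget.
    return int(max_loss // risk_per_share)


# Hypothetical inputs: $50,000 account, 1% risk, entry at $188,
# exit threshold at $173 (roughly 8% below entry).
shares = position_size(50_000, 0.01, 188.0, 173.0)
print(shares)  # 33 shares; a fill at the stop loses 33 * $15 = $495
```

Because the risk budget is fixed, a wider stop automatically produces a smaller position. That is one way to keep volatility from turning into oversized losses. In practice, a gap through the stop or leverage can make the realized loss larger than the budget.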

Frequently Asked Questions

What Exactly Changed In The Nvidia OpenAI Deal?

The earlier framework tied up to $100 billion of Nvidia investment to gigawatt-scale deployments of Nvidia systems. The new structure centers on a roughly $30 billion direct equity investment in OpenAI, separating ownership from the mechanics of data center buildouts.

Does A Smaller Deal Mean OpenAI Will Buy Fewer Nvidia GPUs?

Not automatically. OpenAI’s disclosed infrastructure roadmap still includes deploying at least 10 GW of Nvidia systems, with the first 1 GW targeted for 2H 2026. The more important variable is timing: deployments determine when revenue and margins show up in results.

Which Stocks Have The Most Direct Read-Through?

NVIDIA and AMD have direct exposure through demand for accelerators. Broadcom benefits through custom accelerators and Ethernet networking. Microsoft and Oracle are exposed more indirectly, through hosting capacity, enterprise distribution, and the network buildout associated with AI workloads.

Why Are These Deals Quoted In Gigawatts?

Gigawatts translate power availability into a proxy for data center scale. Multi-gigawatt commitments signal long-duration procurement of chips, networking, power equipment, and real estate, even if spending arrives in phases.
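Rough arithmetic shows how a power figure maps to hardware counts. Dividing a gigawatt budget by an assumed all-in power draw per accelerator gives an approximate unit count. Here "all-in" means the chip plus its share of CPUs, networking, cooling, and facility overhead. The per-accelerator wattages below are illustrative assumptions, not reported specifications. The source also does not say whether a headline gigawatt figure refers to IT load or total facility power. The function name `accelerators_supported` is hypothetical.

```python
def accelerators_supported(gigawatts: float, watts_per_accelerator: float) -> int:
    """Hypothetical helper: rough count of accelerators a power budget supports.

    watts_per_accelerator is an ASSUMED all-in figure (chip plus its share of
    host CPUs, networking, cooling, and facility overhead).
    """
    if watts_per_accelerator <= 0:
        raise ValueError("watts_per_accelerator must be positive")
    return int(gigawatts * 1e9 // watts_per_accelerator)  # 1 GW = 1e9 W


# Illustrative all-in assumptions (watts per accelerator), not vendor specs.
for all_in_watts in (1_500, 2_000, 3_000):
    count = accelerators_supported(10, all_in_watts)
    print(f"10 GW at {all_in_watts:,} W each: ~{count / 1e6:.1f} million accelerators")
```

Across this assumed range, a 10 GW plan works out to roughly 3.3 to 6.7 million accelerators. That is consistent with the “millions of GPUs” framing for the 10 GW of Nvidia systems. The result is only as good as the wattage assumption, which is why gigawatts serve as a proxy for scale rather than a unit count.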

What Should Investors Watch Next?

Look for definitive deal documents, updates on 2H 2026 deployment schedules, and evidence that custom accelerators are being used for production inference at scale. Utilization and realized revenue will matter more than capacity headlines.

Conclusion

NVIDIA’s $30 billion strategic shift signals greater financial discipline in the ongoing AI infrastructure expansion. Demand for computing power continues to accelerate, but supplier competition is becoming more structured and capital allocation more streamlined. Within the technology sector, market leadership is likely to favor companies that turn AI demand into sustainable cash flow while managing delivery schedules and pricing pressure.

Disclaimer: This material is for general information purposes only and is not intended as (and should not be considered to be) financial, investment or other advice on which reliance should be placed. No opinion given in the material constitutes a recommendation by EBC or the author that any particular investment, security, transaction or investment strategy is suitable for any specific person.